SAKET BIVALKAR

Saket’s focus is on helping organisations to become flexible and adaptive, while emphasising that people in the organisation grow as well. His experience includes working with a range of organisations from large, complex global enterprises to small entrepreneurial start-ups.

Auditable, Governed AI Agents: The Next Frontier of Operating Model Transformation

As generative AI moves from experimentation into deployment, enterprises are reaching a critical inflection point.

AI agents, powered by large language models (LLMs), are now embedded across customer service, internal operations, and decision-making systems.

While the potential is significant, the risks are equally real. Without the right operating model, these agents create unmanaged risk, operational opacity, and long-term technical debt.

This article explores why auditability and human governance must become core to enterprise AI adoption, and how leading organizations are adapting.

The Challenge: AI Agents Are Scaling Without Control Structures

Over the past year, AI agents have entered nearly every business function:

- Customer support

- HR operations

- Procurement and vendor management

- Product feedback analysis

- Legal and compliance assistance

These deployments offer clear efficiency gains, but they also expose structural issues.

AI agents are increasingly autonomous. But in many cases, they are operating without proper oversight.

We are already seeing three patterns emerge:

- Hallucinations in production. Agents generate confident but incorrect outputs.

- No audit trail. There is no reliable record of the inputs, prompts, or logic behind the output.

- Unclear accountability. Responsibility for agent behavior is distributed across IT, data science, and business teams, with no clear owner.

This creates fragile systems that are fast-moving but hard to manage.

The Data: 95% of GenAI Pilots Are Failing

A recent MIT study (2025) shows that 95% of enterprise GenAI pilots fail to scale.

One of the top reasons?

Companies avoid friction.

They prioritize speed over structure.

They deploy MVPs without governance.

They treat AI agents as tools, not as systems that require management.

The result is short-term output but long-term risk.

The Strategic Imperative: Make Governance a Core Capability

To move beyond the pilot stage, organizations need more than better models.

They need to redesign how agents are built, deployed, and managed across the enterprise.

That shift relies on two critical capabilities:

1. Auditable Agents: From Black Box to Transparent System

LLMs are not inherently transparent.

Auditability must be intentionally designed.

High-performing organizations are building:

→ Structured logs that capture prompts, decisions, actions, and outcomes

→ Explainability layers that show how specific outputs were generated

→ Model update logs that track changes to training data, prompts, and model versions

These are not just technical features.

They are the foundation for risk, compliance, and learning.
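The structured logs and model update logs described above can be made concrete with a small sketch. The Python below assumes each agent step is recorded as one JSON-serializable record holding the prompt, the decision, the action taken, the outcome, and the model and prompt versions in use. The class and field names (`AuditRecord`, `AuditLog`, `GENESIS_HASH`) are hypothetical. The hash chain, in which each entry stores the hash of the one before it so that later edits become detectable, is a common tamper-evidence technique added here for illustration rather than something the article prescribes.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # hypothetical placeholder "previous hash" for the first entry


@dataclass
class AuditRecord:
    """One agent step: inputs, decision, action, outcome, and versions in use."""
    agent_id: str
    model_version: str   # hypothetical label for the deployed model
    prompt_version: str  # prompt templates versioned like code
    prompt: str
    decision: str
    action: str
    outcome: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def _digest(body: dict) -> str:
    # sort_keys gives a stable serialization, so the same body always hashes the same
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """In-memory, hash-chained log: each entry stores the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: AuditRecord) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {**asdict(record), "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing any entry, or deleting or reordering
        entries in the middle of the chain, makes this return False."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def to_jsonl(self) -> str:
        """Export as JSON Lines, one record per line, for auditors or a log store."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)


if __name__ == "__main__":
    log = AuditLog()
    log.append(AuditRecord(
        agent_id="refund-agent",
        model_version="model-v1",
        prompt_version="refund-prompt-v3",
        prompt="Customer requests a refund for a damaged item.",
        decision="Eligible under the returns policy.",
        action="Escalated to a human reviewer.",
        outcome="Refund approved by reviewer.",
    ))
    print(log.verify())  # True
    log.entries[0]["decision"] = "Edited after the fact."
    print(log.verify())  # False
```

In a real deployment the entries would more likely be written to an append-only store than held in a Python list; the in-memory list simply keeps the sketch self-contained. Note that the chain detects edits and gaps, but not silent truncation of the newest entries on its own.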

2. Human Governance: From Automation to Accountability

As AI agents take on more complex tasks, they need governance structures similar to those that apply to human decision-makers.

That includes:

→ Ownership models with a named responsible party for each agent

→ Escalation paths that define when agents must defer to humans

→ Risk thresholds based on task sensitivity, such as informational vs. transactional vs. critical actions

Without these structures, enterprises lose visibility and control over how decisions are made.
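The ownership, escalation, and risk-threshold structures can likewise be sketched as a small routing check. In this hypothetical Python example, each agent is registered with a named owner and the highest risk tier it may handle on its own; anything above that tier is escalated to the owner, and an agent with no registry entry is blocked because nobody is accountable for it. The tier names follow the article's informational, transactional, and critical examples, but `RiskTier`, `AgentPolicy`, `REGISTRY`, `route`, the placeholder owner addresses, and the rule that critical actions always go to a human are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """Task sensitivity tiers; IntEnum makes them comparable (higher = riskier)."""
    INFORMATIONAL = 1  # e.g. answering a policy question
    TRANSACTIONAL = 2  # e.g. issuing a refund
    CRITICAL = 3       # e.g. changing a contract or an employee record


@dataclass(frozen=True)
class AgentPolicy:
    agent_id: str
    owner: str                     # named responsible party for this agent
    max_autonomous_tier: RiskTier  # highest tier the agent may complete alone


# Hypothetical registry: every deployed agent needs an entry with a named owner.
# The owner addresses are placeholders.
REGISTRY = {
    "support-bot": AgentPolicy(
        "support-bot", "support.lead@example.com", RiskTier.INFORMATIONAL
    ),
    "refund-agent": AgentPolicy(
        "refund-agent", "finance.ops@example.com", RiskTier.TRANSACTIONAL
    ),
}


def route(agent_id: str, tier: RiskTier) -> str:
    """Decide whether an agent may act alone or must defer to a human."""
    policy = REGISTRY.get(agent_id)
    if policy is None:
        # No registered owner means no accountable party, so block the action.
        return "blocked: unregistered agent"
    # Assumption: critical actions always need a human, whatever the ceiling says.
    if tier == RiskTier.CRITICAL or tier > policy.max_autonomous_tier:
        return f"escalate to {policy.owner}"
    return "proceed"


if __name__ == "__main__":
    print(route("support-bot", RiskTier.INFORMATIONAL))    # proceed
    print(route("support-bot", RiskTier.TRANSACTIONAL))    # escalate to support.lead@example.com
    print(route("refund-agent", RiskTier.TRANSACTIONAL))   # proceed
    print(route("refund-agent", RiskTier.CRITICAL))        # escalate to finance.ops@example.com
    print(route("pricing-agent", RiskTier.INFORMATIONAL))  # blocked: unregistered agent
```

Because each agent's ceiling lives in data rather than in the agent's code, a governance board could raise or lower `max_autonomous_tier` as the agent's track record changes, which is one way to implement the dynamic oversight the article calls for.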

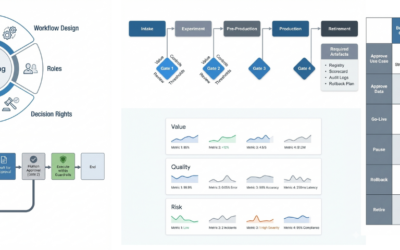

The Path Forward: Redesigning the Operating Model

To scale AI agents safely and effectively, leading organizations are making four key operating model changes:

- Enterprise-wide agent standards. Define a common development, deployment, and risk framework.

- Cross-functional governance boards. Involve legal, product, risk, and operations from day one.

- Continuous feedback loops. Make it easy for users to flag issues and for teams to respond.

- Dynamic governance models. Adjust oversight mechanisms as agents increase in complexity or autonomy.

Conclusion: Governance Is the Enabler of Scalable AI

The real measure of AI maturity is not how many agents a company deploys. It is how well those agents are governed, monitored, and evolved over time.

The companies leading this space are not just shipping faster.

They are building systems that scale with control, not chaos.

Governance is not a bottleneck. It is the foundation.

For any organization serious about GenAI, auditability and human oversight must move from optional to non-negotiable.
